Verifiable MCP Servers

Imagine giving house keys to a stranger who promises to water your plants. They seem trustworthy, but there's no way to verify what they're doing inside the home. This is precisely the trust dilemma with the rapidly growing ecosystem of Model Context Protocol (MCP) servers – they hold the keys to agentic interactions with data and software, but users can't see what's happening behind closed doors.

What is MCP?

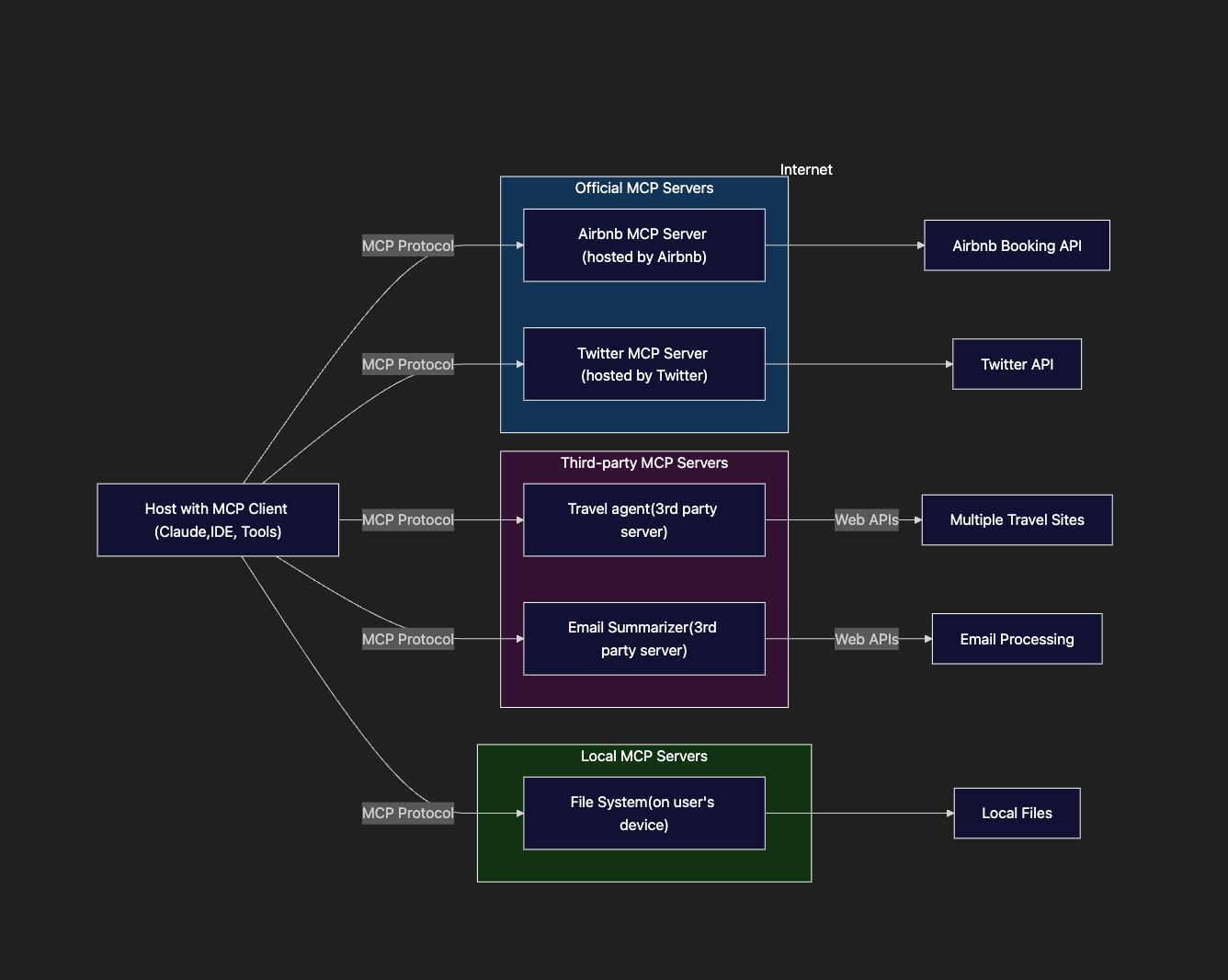

The Model Context Protocol by Anthropic is a standardized communication protocol that allows AI agents to securely connect with external tools, services, and resources. It creates a structured pathway for agents to discover tools, request actions, and receive responses – essentially giving agents the ability to take concrete actions in the digital world.

Rather than being confined to conversation, AI assistants using MCP can send emails, deploy code, access APIs, and interact meaningfully with user data. The protocol acts as a universal translator between AI systems and the digital services people rely on daily.

The MCP ecosystem is evolving along three primary paths. Larger companies like Airbnb and DoorDash are building their own MCP servers, though this requires significant investment. Individual developers can run MCP servers locally, providing tight control but limited scale. However, the approach gaining the most traction involves third-party providers hosting MCP servers that any developer can use, creating a flourishing ecosystem of specialized tools.

Problem: Trusting third-party MCP servers

The rapid proliferation of third-party MCP servers has created a serious trust problem. These servers require deep access to digital lives – they need API keys, OAuth tokens, and other sensitive credentials to function. But there's no assurance they're not silently harvesting data, manipulating requests, or exposing credentials to attackers.

This isn't merely a theoretical concern. MCP servers function as privileged intermediaries between AI assistants and sensitive digital systems. They have unfettered access to credentials that could be silently copied, stored insecurely, or exfiltrated. Users have virtually no visibility into what's actually running on these servers – a server might claim to perform one function while secretly engaging in data mining or other unauthorized activities.

Even when server code is open-sourced, one must ask "What am I actually running?". This problem has plagued software security for decades. There's no guarantee that the published code matches what's running in production. Server operators can modify behavior without users' knowledge, turning benign tools into Trojan horses.

Beyond security concerns, fairness and consistency issues emerge. Without verification mechanisms, users cannot know whether their requests are being processed fairly or if the server is engaging in selective discrimination. Meanwhile, sensitive information processed by these servers remains accessible to operators and potentially vulnerable to breaches.

Tool Poisoning Attacks

Tool poisoning attacks are a recently demonstrated critical vulnerability where malicious instructions are embedded within MCP tool descriptions, invisible to users but visible to AI models. These hidden instructions can manipulate models into performing unauthorized actions without user awareness.

In a tool poisoning attack, malicious directives embedded in tool descriptions can command the model to access sensitive system files, extract private data, or perform actions that appear legitimate while having hidden harmful effects. The danger lies in an asymmetry of information where users see only a sanitized interface while models receive and faithfully execute the complete instructions.

As agents perform actions on increasingly sensitive systems through MCP – from financial applications to healthcare data – this trust gap creates not just theoretical vulnerabilities but practical dangers that could impact millions of users. Without solving this fundamental trust problem, the full potential of AI assistance will remain constrained by legitimate security concerns.

Verifiable MCP servers in TEEs

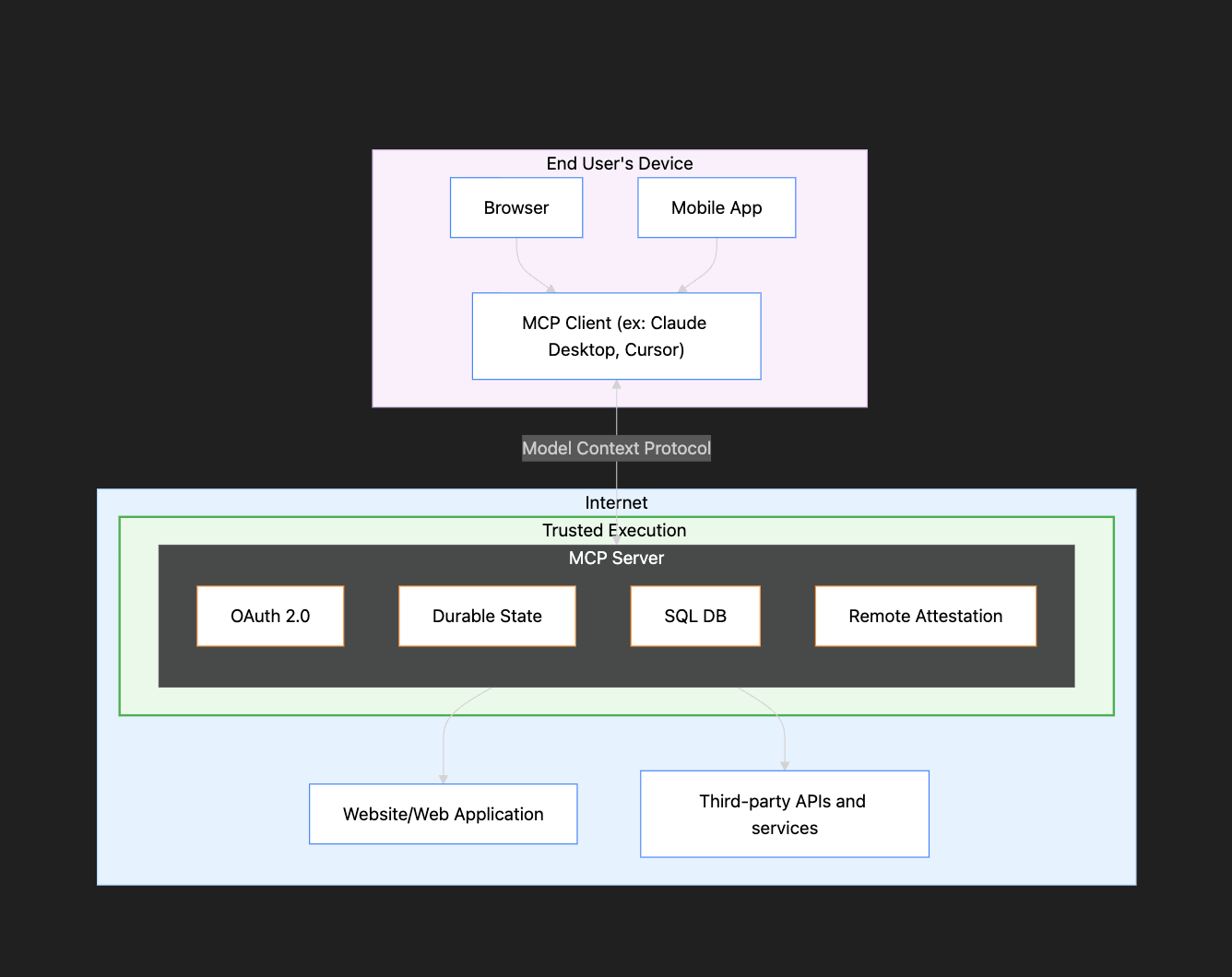

One solution to this trust dilemma is using Trusted Execution Environments (TEEs). By deploying MCP servers within TEEs, secure hardware can guarantee the MCP server’s execution.

TEEs provide hardware-level isolation; inside this protected environment, the MCP server operates within a fortress of encryption, with its memory and operations shielded even from system administrators. The TEE produces cryptographic proof through remote attestation that the correct, unmodified code is running, allowing clients to verify they're communicating with the expected server. As long as the MCP server codebase is public, the cryptographic hash of its codebase can be checked against the hash attested to by the hardware.

This approach addresses each of the trust concerns that plague traditional MCP servers. Credentials and user information processed within the TEE remain encrypted in memory and inaccessible. Sensitive authentication information can be securely zeroized after use, leaving no traces. The TEE guarantees that all requests are processed according to the same rules, preventing discrimination while generating cryptographic proofs of server behavior without compromising private data.

Perhaps most importantly for fighting tool poisoning attacks, the TEE ensures that tool descriptions match exactly what was approved and published. This makes it impossible to inject hidden malicious instructions, as any modification would immediately reflect in the attestation's code hash field.

By placing the MCP server within a TEE, users don't need to trust the server operator – the hardware guarantees security and proper execution, enabling high-stakes applications like financial transactions, identity verification, voting systems, and resource allocation.

Beyond security advantages

The benefits of verifiable MCP servers in TEEs extend beyond security advantages for users. For websites and service providers, this approach creates unique opportunities to participate in the AI ecosystem without building their own infrastructure.

Services don't need to build and maintain their own MCP servers, yet can benefit from AI agents being able to interact with their platforms. Existing APIs can be exposed as tool calls using MCP. Third parties can develop specialized MCP servers with unique capabilities like cross-platform integrations that the original service might not prioritize. This model enables selective endorsement, where services can formally verify and endorse specific third-party MCP servers without building their own. With TEE verification ensuring proper credential handling and execution, services face lower risks from third-party integrations than from unverified intermediaries. Market testing becomes possible as services observe which third-party MCP servers gain traction, helping identify valuable features before investing in official servers.

For smaller services that couldn't justify building their own MCP servers, verifiable third-party servers provide an entry point into the AI agents ecosystem. Rather than dealing with unique security concerns for each AI integration, services can rely on the standard security guarantees provided by verified TEE-based MCP servers.

Conclusion

Today's MCP server landscape resembles aspects of the early internet before HTTPS became standard. While not a perfect comparison, both environments share a critical gap: users must blindly trust intermediaries with sensitive information without verification mechanisms. TEE-based verification offers a path forward, providing cryptographic assurance rather than relying solely on reputation. Much as HTTPS helped enable secure financial transactions online, verifiable MCP servers can unlock AI access to sensitive systems that would otherwise remain off-limits due to legitimate security concerns.

To move this approach from theory to practice, an experimental Gmail MCP server running in a TEE is being released alongside this post. This implementation demonstrates how AI agents can securely access sensitive services while providing mathematical guarantees of credential protection and data privacy. With verifiable execution, the next generation of AI tools can be both powerful and trustworthy by design.